Controlling the data traffic

Before data can be ‘made transparent’, however, it first needs to be streamlined and collected in a clear and consistent way. “The role of a data platform is to enable the gathering of insights from data and to help users make better-informed decisions. How? By collecting and harmonizing data from various sources within the company or organization(s),” explains delaware’s data platform lead Sebastiaan Leysen. “This includes structured as well as unstructured data, big data, small data sets, etc. Additionally, the platform should enable individual applications within or outside an organization to communicate in real time.”

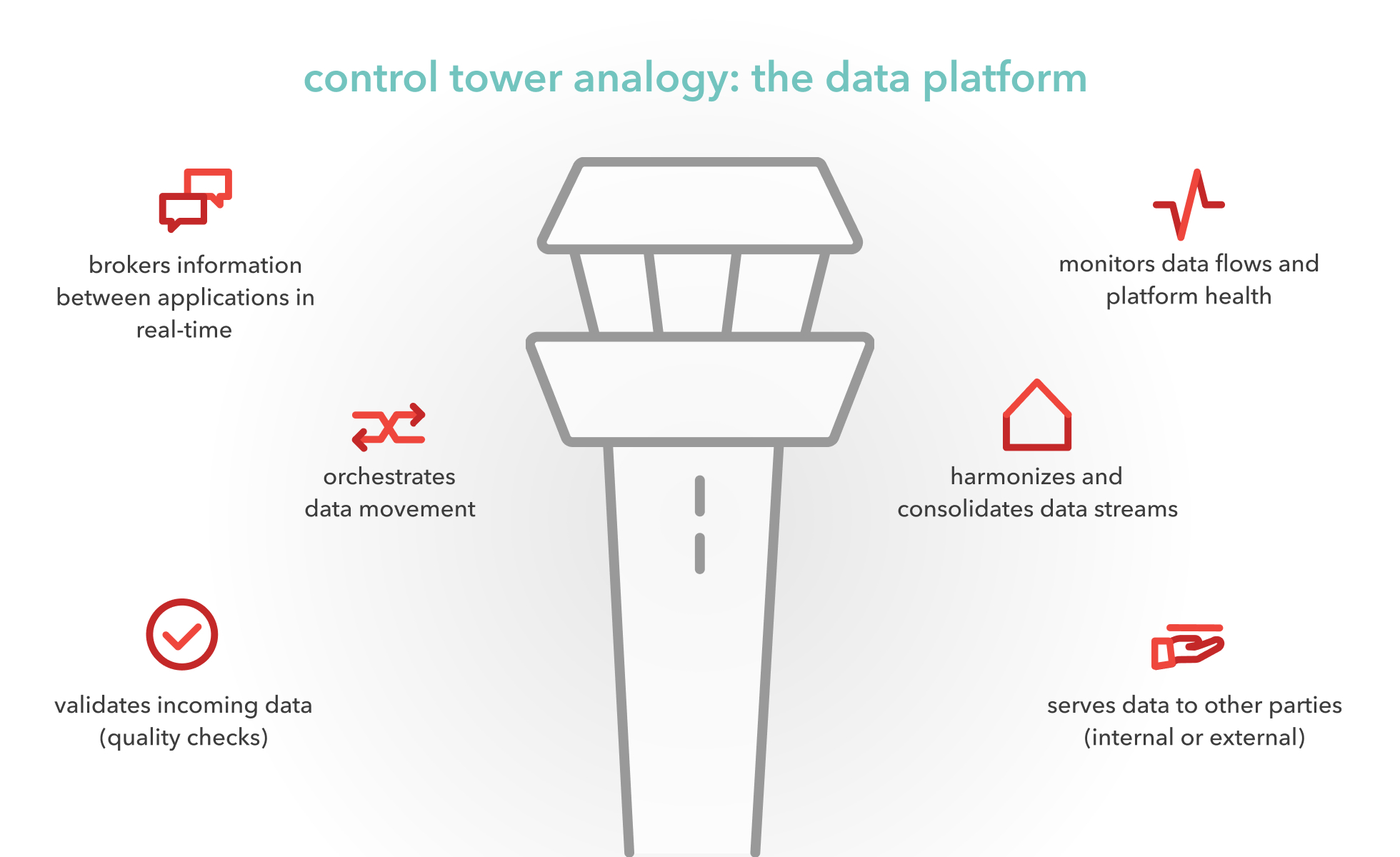

Sebastiaan uses the analogy of an air traffic control tower: “You could compare an organization’s business applications – like ERP, CRM, HR platforms, etc. – to airplanes. All of them communicate with the control tower in near real time, often via an event-driven paradigm, to exchange necessary process information with each other. The ‘tower’ is the data platform that brokers the information among the airplanes, orchestrates data movements, validates incoming data, monitors data flows, harmonizes and consolidates data streams, and serves the data to other internal and/or external parties.”
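To make the control-tower analogy a little more concrete, here is a minimal sketch of an event-driven broker in Python. It is only an illustration under stated assumptions, not a description of delaware's platform or of any specific product: the `ControlTower` class, the required event fields (`source`, `topic`, `payload`) and the topic-normalization rule are all hypothetical.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]
Handler = Callable[[Event], None]


class ControlTower:
    """Hypothetical in-memory broker standing in for the data platform."""

    # Assumed minimal event schema, for illustration only.
    REQUIRED_FIELDS = ("source", "topic", "payload")

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}
        self.rejected: List[Event] = []  # crude monitoring of invalid events

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register an application (an 'airplane') for a topic."""
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, event: Event) -> bool:
        """Validate, harmonize and route one incoming event."""
        # Validate incoming data: reject events with missing fields.
        if any(field not in event for field in self.REQUIRED_FIELDS):
            self.rejected.append(event)
            return False
        # Harmonize: normalize the topic name so all sources agree.
        harmonized = dict(event, topic=str(event["topic"]).strip().lower())
        # Serve the event to every subscribed application.
        for handler in self._subscribers.get(harmonized["topic"], []):
            handler(harmonized)
        return True


tower = ControlTower()
received: List[Event] = []

# An HR platform listens for customer changes coming from the CRM.
tower.subscribe("customer.updated", received.append)

tower.publish({"source": "CRM", "topic": "Customer.Updated ", "payload": {"id": 42}})
tower.publish({"source": "ERP"})  # incomplete event, rejected by validation
```

In this sketch the applications never talk to each other directly: they only publish to, or subscribe at, the tower. A real platform would add persistence, schema management and delivery guarantees on top of this pattern.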
